Thanks, but both of these fixes pose problems.

Adding a space after the end of bold text is necessary to prevent weird behavior with Markdown ** spans in the middle of words. It doesn't matter in every case, but coming up with code to re-check whether a particular ** is going to behave like it's supposed to without a space would be complicated, and frankly there isn't enough content out there using our formatting flags to justify investing days and days of programming time into it. It's easier to just manually fix the small number of files floating around in which it's a problem.

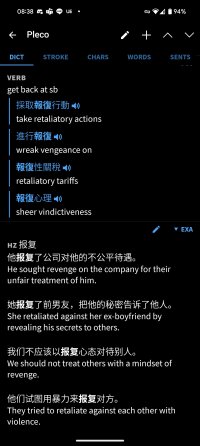

And while the popup definition does already ignore spaces in some situations (e.g. PDF / OCR files, where they can occur randomly), it breaks up words on them in dictionary entries, because a number of dictionaries (English-to-Chinese ones, for example) separate words with just whitespace. I suppose in theory we could make this an option that you could configure on a dictionary-by-dictionary basis, but that's getting awfully specialized.

Also, even in this specific dictionary, it seems like in most cases you would want the reader to terminate at the end of the bold span, since that ought to be a word break, with the bolded text being a standalone word. The bolded word in that example should really be 火车站, and it should be listed under that rather than 火车. In an ambiguous segmentation case (研究所+有 versus 研究+所有, for example), if just 研究 is bolded and it's followed by a space, the reader ought to favor the second segmentation.

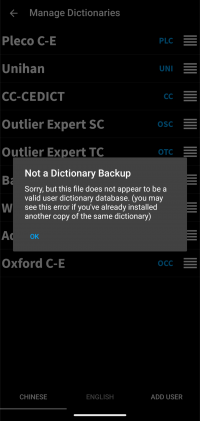
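To make the segmentation point concrete, here is a toy sketch in Python. It is not how the reader actually segments text; the tiny `LEXICON`, the function names, and the `forced_break` parameter are all made up for illustration. It just shows how treating the end of a bold span as a known word break rules out one of the two readings.

```python
# Toy illustration only: a hypothetical lexicon and brute-force segmenter,
# not the reader's real algorithm.
LEXICON = {"研究", "研究所", "所有", "有"}


def segmentations(text, lexicon):
    """Yield every way to split text into words found in lexicon."""
    if not text:
        yield []
        return
    for end in range(1, len(text) + 1):
        head = text[:end]
        if head in lexicon:
            for rest in segmentations(text[end:], lexicon):
                yield [head] + rest


def boundaries(words):
    """Return the character offsets at which a word ends."""
    offsets, pos = set(), 0
    for word in words:
        pos += len(word)
        offsets.add(pos)
    return offsets


def segment_with_break(text, lexicon, forced_break):
    """Keep only segmentations that have a word boundary at forced_break."""
    return [
        words
        for words in segmentations(text, lexicon)
        if forced_break in boundaries(words)
    ]


# Without any hint, both readings are possible.
print(list(segmentations("研究所有", LEXICON)))
# If 研究 is bolded and followed by a space, offset 2 is a known break.
print(segment_with_break("研究所有", LEXICON, forced_break=2))
```

With the break forced after the second character, only 研究+所有 survives, which is the behavior described above.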

Anyway, the best solution here would probably be to export this dictionary in Markdown, remove all of those spaces after the **s, and reimport it. Or you could play around with doing it with a batch command, or simply find-and-replace the private-use Pleco characters in the original dictionary with their Markdown equivalents.
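If you go the export route, the space removal could be scripted with something like the rough Python sketch below. It makes a few assumptions you should check against your own file: bold spans use `**` and never contain a `*` or a line break, and by default it only drops the space when a Chinese character follows, so that ordinary English text after a bold word keeps its spacing. The function name and the `only_before_cjk` flag are just names I picked for the example.

```python
import re

# Assumption: a bold span is **...** with no '*' or newline inside it.
_BOLD = r"(\*\*[^*\n]+\*\*)"
# Common CJK ideograph ranges (Extension A and the main Unified block).
_CJK = r"[\u3400-\u4dbf\u4e00-\u9fff]"

_BOLD_THEN_SPACE_ANY = re.compile(_BOLD + r"[ \t]+")
_BOLD_THEN_SPACE_CJK = re.compile(_BOLD + r"[ \t]+(?=" + _CJK + r")")


def strip_space_after_bold(text, only_before_cjk=True):
    """Remove spaces/tabs that directly follow a closing ** marker.

    With only_before_cjk=True, whitespace is removed only when the next
    character is a Chinese character; otherwise it is removed everywhere.
    """
    pattern = _BOLD_THEN_SPACE_CJK if only_before_cjk else _BOLD_THEN_SPACE_ANY
    return pattern.sub(r"\1", text)


print(strip_space_after_bold("**火车** 站"))
print(strip_space_after_bold("**bold** word"))
```

Run it on a copy of the exported file first and compare the result before reimporting, since a dictionary with unusual formatting could trip up the simple pattern.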